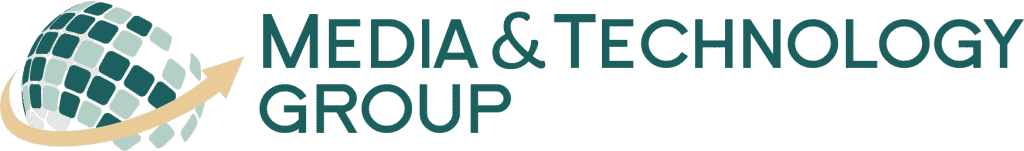

As technology advances, the ethics of AI-powered autonomous weapons is drawing increasing attention around the world. This topic doesn’t concern only scientists or military strategists. It touches on moral boundaries and societal values that everyone should care about. What happens when machines can make life-and-death decisions? Let’s delve into this complex issue together.

Understanding Autonomous Weapons

First, what are autonomous weapons? They are machines equipped with artificial intelligence that can select and engage targets without human intervention. These AI systems are built to carry out missions on their own, using data and algorithms to make judgments on the battlefield. While this might sound like a sci-fi movie, it’s an emerging reality.

At Media & Technology Group, LLC, where we specialize in AI implementation and technical project management, understanding these technologies is crucial. But beyond the tech, the conversation often leads us to a critical question: Should machines be allowed to make decisions that were once the sole domain of humans?

The Ethical Dilemmas

When we talk about AI in warfare, several ethical dilemmas come to mind. A key concern is accountability. Who’s responsible if an autonomous weapon makes a mistake? It’s challenging to place blame on a machine. Is it the programmer, the commander, or the manufacturer?

Moreover, machines lack human judgment and empathy. They assess situations using data and algorithms, not moral reasoning. This can lead to decisions that humans would find unacceptable. The ethical problems are therefore not just hypothetical; they’re very real.

Balancing Innovation with Responsibility

Many of us at Media & Technology Group, LLC, spend our time balancing innovative technology with ethical responsibility. We offer AI consulting services and recognize that our advanced tech needs to align with societal values. How do we ensure that our AI systems serve humanity and not inadvertently harm it?

To address this, international treaties and guidelines could serve as frameworks. Nations need to work together to set standards that govern the use of autonomous weapons. A practical starting point is rigorous testing and verification before deployment, to reduce the risk of failure or misuse.

Moving Forward: What Can We Do?

The conversation about AI autonomous weapons ethics needs collective engagement. We, as members of a global community, should urge policymakers to take these discussions seriously. Encouraging public debates can lead to more informed decisions. Transparency is key in managing the evolution of warfare technologies.

- Educate the public about ethical AI dilemmas to build awareness.
- Participate in forums and discussions to voice your concerns.
- Support ethical AI development through stringent regulation.

In conclusion, as AI systems become more integrated into our daily lives, it is essential to navigate their ethical implications carefully. Media & Technology Group, LLC, is committed to addressing these questions as we advance in AI technologies. We must ensure that the tech we build today respects the values we treasure and safeguards the future we hope to create.
